Responsible AI Governance: Future-Proofing Corporate Governance, Strategy, Risk Management and Reporting

With the introduction of any new technology, its governance, as much as its implementation, will determine the impact it has on a business and on wider society. Regulator and investor interest in artificial intelligence (AI) is growing but still at an early stage, which means businesses bear the responsibility for establishing the guidelines that govern the use of AI in their operations and by their workforce. While the effects of AI may not be immediately apparent, its profound implications for society and business operations demand attention.

However, despite the growing consensus on the importance of addressing AI-related risks, the absence of an established framework presents a challenge for businesses seeking to develop an effective governance structure. The recent upheaval at OpenAI, which led to the temporary departure of the company's CEO, highlights the need for stronger governance structures to navigate the complexities of AI.

In this context, we advocate a proactive approach to AI governance, drawing parallels with environmental, social and governance (ESG) frameworks, which offer valuable tools for managing AI-related risks. Through our Responsible AI Principles, Key Questions and Five-Step Action Plan, we provide practical advice to business leaders looking to develop a robust governance framework and make a strong commitment to responsible AI. Our paper starts with a review of AI's opportunities and risks, the regulatory environment and investor practices. Readers already familiar with this landscape can turn directly to our recommended approach, which starts on page 20.

Our analysis of the regulatory environment, including a deep dive into the European Union (EU) AI Act and the United States (US) National Institute of Standards and Technology (NIST) AI Risk Management Framework, shows a growing recognition that AI systems need to be governed. Some jurisdictions, such as the EU, Brazil and Canada, have opted for binding obligations to safeguard stakeholders, while others, such as the US, the United Kingdom (UK) and Japan, have favoured softer regulatory approaches to encourage innovation. Yet all of these initiatives broadly revolve around the core principles of security, transparency and accountability. Our review also provides a table mapping regulatory initiatives against an extended set of 10 Responsible AI Principles, which may guide businesses as they build robust AI policies. These principles are described briefly below, with more detail on page 14.

- Data Governance: establish policies, procedures and standards for the collection, management and use of data.

- Traceability/Record-keeping: ensure AI developers/engineers trace and maintain records of data sources, algorithms and decision-making processes.

- Explainability: ensure AI systems are able to provide clear and comprehensive explanations for their decisions and actions.

- Accountability/Human Oversight: assign responsibility for AI systems’ behaviour and decisions.

- AI Literacy: ensure employees and other relevant individuals possess adequate AI literacy, taking into account their technical expertise, experience, education and training, as well as the context in which the specific AI system is used and its end-users.

- Accuracy/Fairness: assess the precision and correctness of AI predictions and decisions, ensuring that they align with the desired objectives and that bias is mitigated.

- Transparency: make AI systems visible and understandable to the relevant stakeholders (including the users) to foster trust and accountability.

- Security, Safety, and Robustness: establish measures to protect AI systems from unauthorised access and cyber threats, and to ensure their security and safety in operation.

- Data Protection: safeguard the privacy and security of the data used by AI systems.

- Contestability: provide individuals or entities affected by AI with the opportunity to raise concerns through the relevant complaints process.

Alongside regulatory initiatives, a number of investors are starting to encourage companies to develop responsible AI governance frameworks. Norges Bank Investment Management (NBIM), which manages assets exceeding $1.4 trillion, has laid out its expectations for portfolio companies, focusing on board accountability, transparency and explainability, alongside robust risk management. The Collective Impact Coalition for Digital Inclusion, an investor group managing $6.9 trillion, focuses on engaging with technology companies, asking them to disclose their commitment to the principles of ethical AI. In the US, five entertainment companies and one technology giant have also been targeted by shareholder proposals asking them to clarify their AI-related risks and how they intend to manage them. Collectively, this nascent investor push suggests that companies should prepare for greater engagement with shareholders on AI-related risks.

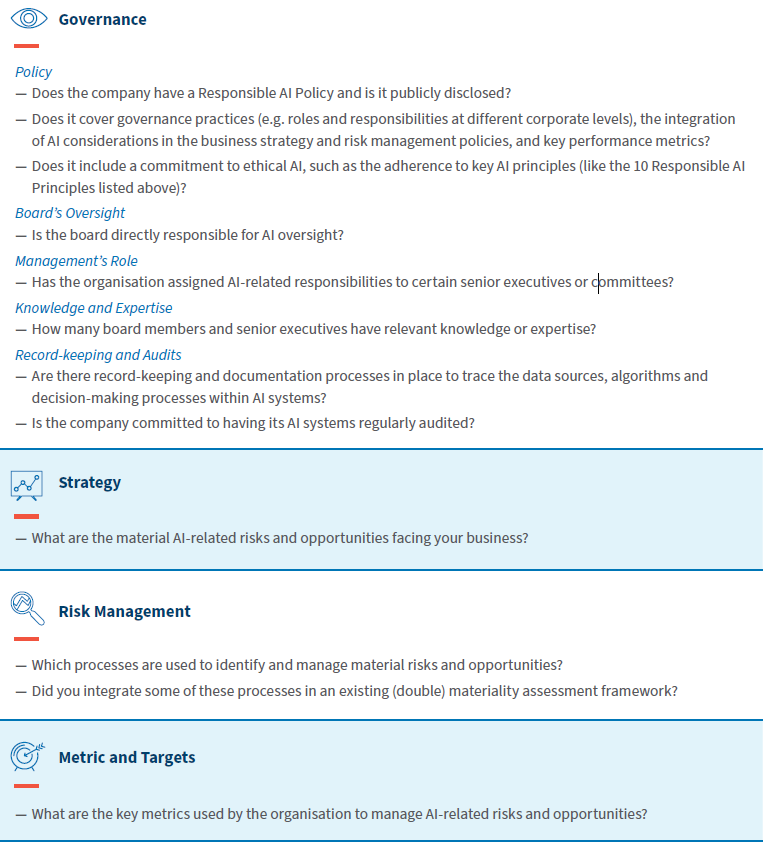

Investors are increasingly familiar with existing ESG disclosure frameworks, such as those of the Task Force on Climate-related Financial Disclosures (TCFD) and the Taskforce on Nature-related Financial Disclosures (TNFD), and we believe these frameworks provide an effective way to communicate a company's approach to managing AI-related risks and opportunities. Therefore, in alignment with their four-pillar structure, we provide a set of questions designed to guide companies in disclosing and effectively demonstrating their commitment to responsible AI. We show a subset of these Key Questions below, with the full list set out on page 21.

In addition to our Key Questions, we provide a Five-Step Action Plan aimed at building a credible commitment to responsible AI. The process, summarised below, should be viewed as an iterative loop, with each cycle improving company practices. More detail is included on page 23.

Step 1: Develop an overarching AI policy that sets guardrails for how AI should be used, based on our Key Questions and Responsible AI Principles.

Step 2: Ensure the board, management and employees possess the requisite skills and understanding of AI systems and their inherent risks.

Step 3: Report on the implementation of the policy and demonstrate progress in the company’s management of AI-related risks and opportunities.

Step 4: Include the outcomes of independent third-party audits of AI systems in annual reporting to further strengthen the signal to the market of the firm's commitment to responsible AI practices.

Step 5: Participate in industry-level best-practice sharing to ensure policies and processes remain up to date and on par with those of peers.

In conclusion, the paper highlights that the tremendous benefits brought by new AI technologies come with significant risks for businesses and society. Regulatory frameworks are emerging, and investors are starting to set their own expectations for their portfolio companies. With our 10 Responsible AI Principles, Key Questions and Five-Step Action Plan, our objective is to help businesses navigate this rapidly evolving landscape. These tools are designed to help firms protect their business operations, reduce reputational and litigation risks, and capture the opportunities offered by AI with greater confidence.